Kubernetes networking is flat by default: any pod can talk to any other pod, even across namespaces. This openness is dangerous in production.

That’s where NetworkPolicies come in: a critical tool to control who can talk to whom, down to the port and protocol level.

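But here's the catch: NetworkPolicies only work if your CNI (Container Network Interface) plugin supports them, as Calico and Cilium do. Without such a plugin, the API server still accepts NetworkPolicy objects, but nothing enforces them.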

Why Kubernetes Networking Needs Boundaries

Without controls:

Pods across teams can communicate without restriction.

Misconfigurations in one app can affect others.

It undermines zero trust principles and compliance frameworks such as PCI-DSS and ISO 27001.

Why, Where, and When to Use NetworkPolicies

Use NetworkPolicies if you:

Run multi-tenant clusters (teams/projects in different namespaces).

Need compliance (financial, healthcare, government).

Adopt a Zero Trust model (no implicit trust across services).

Want to reduce blast radius (limit lateral movement during a compromise).

What If You Don’t?

Without NetworkPolicies:

Any pod can port-scan others — even across namespaces.

Malicious insiders or misconfigured pods can access sensitive services.

Lateral movement becomes easy — one breach spreads fast.

Example Risk Scenario:

1. A frontend pod is compromised via a Log4j exploit.

2. The pod scans and connects to the payments service in another namespace.

3. It accesses internal APIs or customer data; no policy stops it.

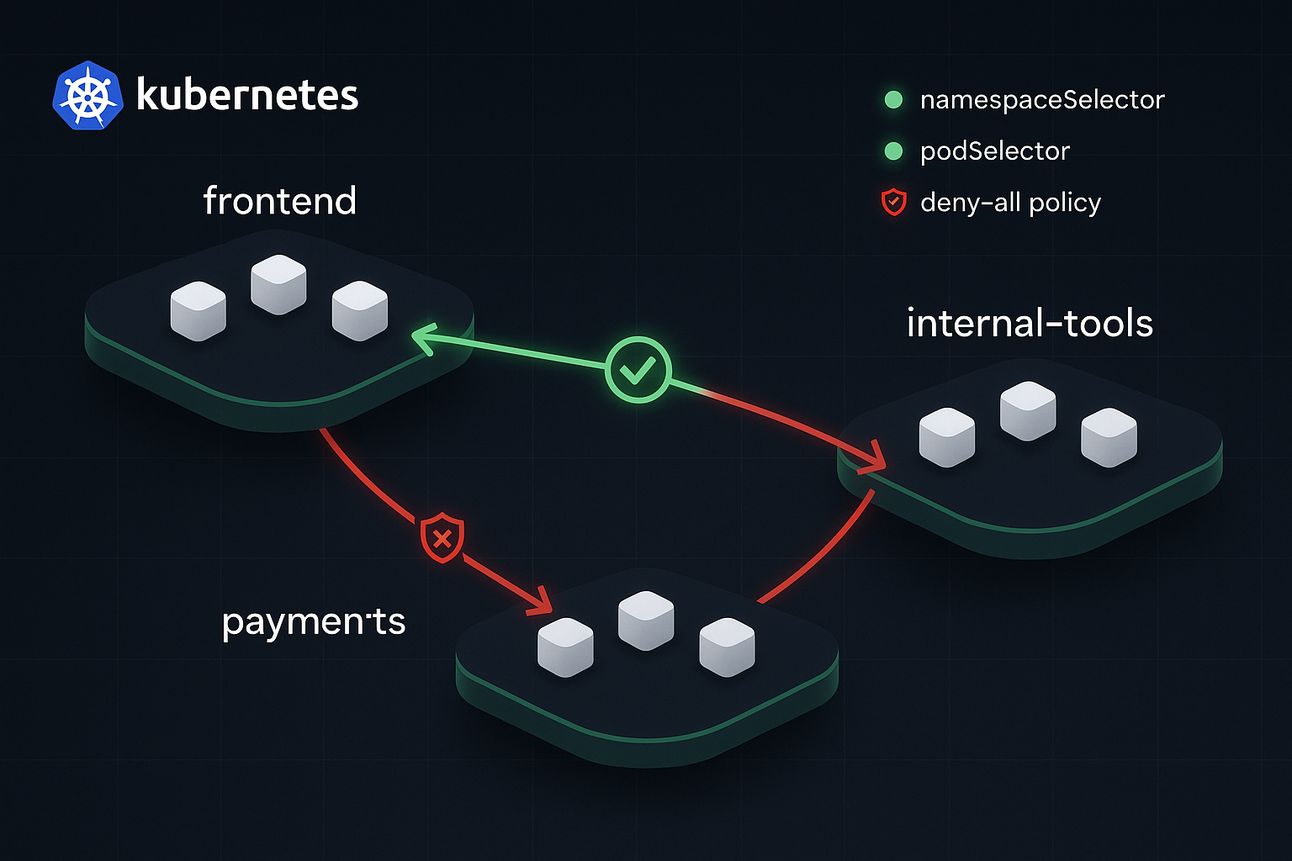

Real Case Study: Frontend → Payments

Setup:

Two namespaces: frontend and payments

The payment service should only be accessed by a specific API pod in frontend

Before:

No NetworkPolicy: any pod in frontend can access any pod in payments

Default Kubernetes behavior = open communication

```bash
# From any pod in frontend
curl https://payment-service.payments.svc.cluster.local
# Succeeds, even if the caller is not an API pod
```

After:

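Only pods labeled role=api-client in frontend, AND only when that namespace is labeled team=frontend, can access payment-service in the payments namespace on port 443. (NetworkPolicy ports match the port the pod itself listens on, not the Service port, so this assumes the payment pods serve TLS directly on 443.)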

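```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-frontend-to-payment
  namespace: payments
spec:
  # The policy applies to the payment-service pods in the payments namespace
  podSelector:
    matchLabels:
      app: payment-service
  ingress:
    - from:
        # namespaceSelector and podSelector in the SAME list item are ANDed:
        # the caller must be labeled role=api-client AND run in a namespace labeled team=frontend
        - namespaceSelector:
            matchLabels:
              team: frontend
          podSelector:
            matchLabels:
              role: api-client
      ports:
        - protocol: TCP
          port: 443
```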

Namespace Label

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: frontend
  labels:
    team: frontend
```
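If you would rather not maintain a custom team label, Kubernetes (v1.22 and later) sets an immutable kubernetes.io/metadata.name label on every namespace, with the namespace's name as its value. A minimal sketch of the ingress section of allow-frontend-to-payment keyed on that label instead; it is a fragment, not a complete manifest:

```yaml
# Fragment: alternative ingress section for allow-frontend-to-payment
ingress:
  - from:
      - namespaceSelector:
          matchLabels:
            # Set automatically by the control plane on every namespace (v1.22+)
            kubernetes.io/metadata.name: frontend
        podSelector:
          matchLabels:
            role: api-client
    ports:
      - protocol: TCP
        port: 443
```

The trade-off: a team label can be shared by several namespaces owned by the same team, while the name label pins the rule to exactly one namespace and cannot be forgotten at namespace creation.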

How to Apply the Solution

1. Start Minimal: Deny All

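```yaml
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: deny-all
  namespace: payments
spec:
  podSelector: {}   # empty selector = every pod in the namespace
  policyTypes:
    - Ingress       # blocks all inbound traffic; egress is not restricted by this policy
```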
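The deny-all policy above lists only Ingress, so pods in payments can still open any outbound connection. A minimal sketch of a stricter baseline that also denies egress, plus a second policy that re-allows DNS so service names still resolve. It assumes cluster DNS runs in kube-system with the label k8s-app: kube-dns (common for CoreDNS, but verify on your cluster); both policy names are hypothetical.

```yaml
# Default-deny in both directions for every pod in payments
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress-egress   # hypothetical name
  namespace: payments
spec:
  podSelector: {}
  policyTypes:
    - Ingress
    - Egress        # no egress rules are listed, so all outbound traffic is denied
---
# Re-allow DNS lookups to cluster DNS (assumes the k8s-app=kube-dns label)
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-dns-egress              # hypothetical name
  namespace: payments
spec:
  podSelector: {}
  policyTypes:
    - Egress
  egress:
    - to:
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: kube-system
          podSelector:
            matchLabels:
              k8s-app: kube-dns
      ports:
        - protocol: UDP
          port: 53
        - protocol: TCP
          port: 53
```

Because NetworkPolicies are additive, the DNS policy opens one narrow path through the default deny without weakening it anywhere else.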

2. Selective Allow

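Use the namespaceSelector, podSelector, and ports fields to define exact allow rules. Policies are additive, so each allow policy opens one specific path through the default deny.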
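Where a selector sits inside from decides whether it is combined with AND or OR, and this is an easy mistake to make. In the comparison fragment below, the two selectors are separate list items, so they are ORed, which is much looser than allow-frontend-to-payment:

```yaml
# Fragment for comparison only: NOT equivalent to allow-frontend-to-payment
ingress:
  - from:
      # Item 1: ANY pod in a namespace labeled team=frontend
      - namespaceSelector:
          matchLabels:
            team: frontend
      # Item 2: pods labeled role=api-client in the policy's OWN namespace (payments)
      - podSelector:
          matchLabels:
            role: api-client
    ports:
      - protocol: TCP
        port: 443
```

A podSelector with no namespaceSelector next to it only matches pods in the same namespace as the policy, which is why item 2 refers to payments, not frontend.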

3. CI/CD Automation Patterns

Version NetworkPolicies as YAML in Git

Deploy using Helm or Kustomize

Validate using kubectl diff, kubeval (now archived; kubeconform is its maintained successor), and conftest
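For the Git plus Kustomize pattern, a minimal sketch is a kustomization.yaml that bundles a namespace's policies so they are reviewed and applied as one unit. The file names are hypothetical and assume each manifest from this article is saved as its own file.

```yaml
# kustomization.yaml (hypothetical layout: one file per policy)
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
namespace: payments          # sets the namespace on every resource listed below
resources:
  - deny-all.yaml
  - allow-frontend-to-payment.yaml
```

In a pipeline, kubectl diff -k . previews the change against the live cluster, and kubectl apply -k . applies it once the review passes.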

Observability & Debugging

Tools:

Netshoot Pod – test with curl, dig, and telnet from inside a pod

Calico Flow Logs – see allowed/denied traffic

Cilium Hubble – visualize flows between namespaces live

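```bash
# Start a throwaway debug pod in frontend (deleted when you exit)
kubectl run test --rm -it --image=nicolaka/netshoot -n frontend -- bash

# Then, inside the pod's shell:
curl https://payment-service.payments.svc.cluster.local
```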
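Note that kubectl run labels the debug pod run=test, not role=api-client, so once allow-frontend-to-payment is applied, that curl should hang until it times out. To test the allowed path as well, one option is a debug pod that carries the client label. A minimal sketch; the pod name is hypothetical:

```yaml
# Debug pod that matches the allow rule's podSelector
apiVersion: v1
kind: Pod
metadata:
  name: netshoot-api-client      # hypothetical name
  namespace: frontend
  labels:
    role: api-client             # the label allow-frontend-to-payment permits
spec:
  containers:
    - name: netshoot
      image: nicolaka/netshoot
      command: ["sleep", "3600"] # keep the pod alive for an hour of testing
```

Running kubectl exec -it netshoot-api-client -n frontend -- curl https://payment-service.payments.svc.cluster.local should then reach payment-service, while the unlabeled test pod stays blocked. Delete the pod when you are done, since the policy trusts it.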

Alternative Solutions

Policy Enforcement:

OPA Gatekeeper – enforce rules like “must have deny-all”

Kyverno – policy-as-code for NetworkPolicy enforcement in CI
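As one example of the Kyverno approach, a ClusterPolicy with a generate rule can create a default-deny NetworkPolicy in every newly created namespace, so no team has to remember it. This is a sketch modeled on Kyverno's generate rules; field names and the RBAC Kyverno needs to create NetworkPolicies vary between releases, so check it against the version you run. The policy names are hypothetical.

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: add-default-deny            # hypothetical name
spec:
  rules:
    - name: default-deny
      match:
        any:
          - resources:
              kinds:
                - Namespace
      generate:
        apiVersion: networking.k8s.io/v1
        kind: NetworkPolicy
        name: default-deny
        # Create the policy inside the namespace that triggered the rule
        namespace: "{{request.object.metadata.name}}"
        synchronize: true           # keep the generated policy in sync with this rule
        data:
          spec:
            podSelector: {}
            policyTypes:
              - Ingress
              - Egress
```

Namespaces that already exist are not covered by default, so the deny-all manifests still need to be applied to them directly.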

Layer 7 Alternatives:

Istio / Linkerd – use mTLS + service-level policies

Cilium Cluster-Wide Policies – advanced L3-L7 policies
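For the Istio route, a minimal sketch of an identity-based rule: with mTLS enabled, an AuthorizationPolicy can admit callers by their service account identity instead of pod labels. It assumes both namespaces are in the mesh and that the frontend API pods run under a service account named api-client (hypothetical).

```yaml
apiVersion: security.istio.io/v1beta1
kind: AuthorizationPolicy
metadata:
  name: payment-allow-api-client    # hypothetical name
  namespace: payments
spec:
  selector:
    matchLabels:
      app: payment-service
  action: ALLOW                     # requests matching no ALLOW rule are denied
  rules:
    - from:
        - source:
            # mTLS identity: <trust domain>/ns/<namespace>/sa/<service account>
            principals: ["cluster.local/ns/frontend/sa/api-client"]
```

Because this check runs on the workload's mTLS identity at the sidecar, it is usually layered on top of NetworkPolicies rather than used in place of them.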

Are These Solutions Recommended?

Yes, if:

You run multi-tenant or prod workloads

You need compliance (PCI, ISO)

You care about security observability & control

Maybe not, if:

You’re running a dev-only cluster

Single team with trusted workloads

Final Checklist Before You Apply

Is your CNI (e.g., Calico, Cilium) installed and policy-aware?

Are all namespaces labeled?

Is a default deny-all policy in place?

Are ingress and egress rules scoped per service?

Are your policies version-controlled and tested in CI?
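To make the "scoped per service" item concrete: if frontend also runs a default-deny egress policy, the API pods need an explicit egress allow toward payments. A minimal sketch, assuming the payments namespace carries the automatic kubernetes.io/metadata.name label (Kubernetes v1.22+); the policy name is hypothetical.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-api-client-to-payments   # hypothetical name
  namespace: frontend
spec:
  podSelector:
    matchLabels:
      role: api-client                 # only the API pods get this egress path
  policyTypes:
    - Egress
  egress:
    - to:
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: payments
          podSelector:
            matchLabels:
              app: payment-service
      ports:
        - protocol: TCP
          port: 443
```

Under a default-deny egress baseline, pair this with a DNS egress allow; otherwise payment-service.payments.svc.cluster.local will not resolve from the API pods.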

NetworkPolicies = Firewalls inside your Kubernetes cluster.

They're simple but powerful — especially when combined with:

Clear labels

Policy-as-code

Proper tooling

Secure your namespaces. Deny by default. Allow only what’s necessary.

Conclusion: Enforcing Secure Namespace Communication with Kubernetes NetworkPolicies

Kubernetes NetworkPolicies are a foundational building block for network security, zero trust enforcement, and multi-tenant cluster isolation. When applied correctly, using namespaceSelector, podSelector, and default-deny rules, they transform your cluster from an open mesh into a tightly scoped, auditable, and compliant environment.

By implementing NetworkPolicies:

You reduce the blast radius of breaches

You enforce least privilege access between microservices

You help satisfy the network segmentation requirements of compliance mandates like PCI-DSS, ISO 27001, and RBI

You establish a policy-as-code culture with CI/CD and GitOps

Whether you're operating in fintech, e-commerce, healthcare, or a multi-team internal platform—securing cross-namespace communication is non-negotiable.

Start simple: deny by default, allow only what’s essential, and observe flows using tools like Cilium Hubble or Calico Flow Logs.

Combine this with Kyverno or OPA Gatekeeper to automate enforcement, and you’ll build a production-grade Kubernetes security posture from the ground up.

Secure Kubernetes is not a feature — it’s a discipline.

Let NetworkPolicies be your first line of defense.